Defining Role

By Jessica Zimmer

Radiology Today

Vol. 26 No. 8 P. 22

How should AI be deployed in radiology?

Increasingly, hospitals and clinics around the world are using AI-powered imaging software to indicate whether a patient needs care and whether more extensive imaging should be performed. In the United States, the FDA permits AI-powered imaging software to be used only for assisted diagnosis and triage. The radiologist treating the patient remains legally responsible for catching and correcting mistakes that a program makes, because the medical professional bears the responsibility for identifying errors and determining the course of treatment.

“The result of the FDA’s regulations is that an AI-powered imaging program can only show you one issue, like a single fracture, at a time,” says Bradley Erickson, MD, PhD, a professor of radiology at the Mayo Clinic in Rochester, Minnesota. “The FDA does not allow an AI-powered program to show additional fractures. The single fracture may not be the first, in terms of location, such as the lowest vertebra in the spine. It also may not be the most significant fracture.”

A single flagged issue is enough to indicate that immediate care and more imaging are needed. The radiologist then has to decide what to do next.

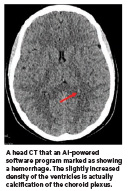

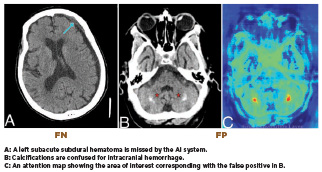

“AI systems are not perfect. They can overcall, mistaking anatomical variations, calcifications, or postoperative changes for pathology like brain hemorrhage, resulting in false positives. They can also undercall, particularly by missing pulmonary embolisms (blockages of blood flow to the lungs caused by blood clots), resulting in false negatives,” says Matthew Barish, MD, a radiologist, vice chair of informatics for the radiology service line, and chief medical information officer of imaging for Northwell Health in New York City. Because AI-powered software is highly sensitive, its errors tend to be false positives more often than false negatives.

Radiologists also use AI-powered software for numerous tasks other than medical imaging, such as summarizing the impressions section of a report, responding to patient emails, and querying research databases about medical phenomena, says Judy Wawira Gichoya, MD. Gichoya is an associate professor in the department of radiology and imaging sciences at Emory University’s School of Medicine and codirector of Emory University’s Healthcare AI Innovation and Translational Informatics Lab.

For nonimaging tasks, the radiologist has the final say on what a report states, what message a patient receives, and how information from a database is used. “Because we always use the human in the loop, then the human tends to be the person who’s responsible,” Gichoya says.

This is also true in Canada, where AI is seen as a helper, not a replacement for radiologists. “One reason why is that vendors are not always transparent about the utility of AI tools. Things that may work well initially may not do so after several months. We keep the channels of communication open, reporting all incidents so concerns get fixed,” says David Koff, MD. Koff is professor emeritus of radiology at McMaster University in Hamilton, Ontario, Canada, and founder and director of the Medical Imaging Informatics Research Centre at McMaster University.

Technological Development

Aidoc, a developer of AI-powered medical imaging solutions, has designed its aiOS platform to deliver more than 30 FDA-cleared AI solutions for different types of diagnosis. Each solution is a pathway with a specific purpose. These include the detection and triage of cervical spine fractures and life-threatening pulmonary embolisms, stroke care coordination, and the detection, measurement, and comparison of lung nodules.

Aidoc is based in Tel Aviv and has offices in New York and Barcelona. Its software is used in more than 150 health systems around the world. Most of the health care providers that purchase Aidoc’s programs are based in the United States. The company also has users in Europe, Australia, South Africa, Israel, and Brazil. Aidoc’s customers include Northwell Health, Yale New Haven Health, and Sutter Health. Aidoc’s FDA-cleared algorithms include tools that support radiologists in triaging acute findings, measuring significant findings, and streamlining care coordination with downstream teams.

Going forward, Aidoc expects next-generation cloud graphics processing units (GPUs) to increase both training and inference throughput, meaning the amount of data a system can process in a given amount of time. “Current GPUs are sufficient. Better ones gradually improve the speed and effectiveness of the training experiments and, ultimately, the solutions. It’s a never-ending, gradual process,” says Elad Walach, cofounder and CEO of Aidoc.

Israel’s health care plans, called integrated health funds, and the widespread use of EHRs can make pilots and prospective validation of software move faster than in other countries. “US/Canadian deployments often move through larger, multisite systems and stricter productization gates,” says Junyang Cai, a PhD student in operations management in the department of data and decision sciences at Technion University in Haifa, Israel. “The goals are aligned: safety, equity, and measurable clinical benefit. But Israel’s scale and integration can shorten the learn-iterate cycle before broader North American rollouts.”

The process of designing AI-powered imaging software is naturally collaborative. Developers work on model and product design. Radiology departments work on workflow design, validation, and quality assurance. Regulators, such as government agencies, update regulatory pathways.

In Israel, Sheba Medical Center’s Accelerate, Redesign, Collaborate Center in Ramat Gan brings together startups, clinicians, and researchers for rapid pilots. Clalit Health Services’ Research Institute, also in Ramat Gan, supports large-scale, data-driven evaluation. This cooperation drives improvements that later reach US hospitals, Cai says.

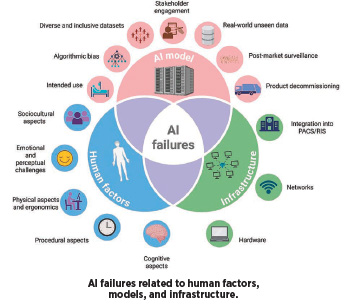

A tight loop like the one described above is also key to mitigating AI errors, says Nikolaos Stogiannos, MSc, who studies the use of AI as an honorary research fellow at the School of Health & Medical Sciences at City St George’s, University of London. “AI failures can be largely divided into three distinct categories: those related to the AI model itself, those occurring due to infrastructure deficiencies, and those related to human factors,” Stogiannos says. “One of the most important factors when developing AI algorithms is the quality of training and testing data. That means that the data used for development purposes must always be diverse and inclusive, avoiding bias related to age, gender, ethnicity, sex, and socioeconomic status.”

Software developers should also perform external testing, using benchmarking datasets from multiple sites. Further, they should offer clear intended-use statements for their tools and engage key stakeholders from the early phases of an AI model’s lifecycle. Stogiannos says patients should be actively involved in this process.

Explaining AI

Across the United States, hospitals are only beginning to share how they use AI in radiology. “Northwell is evaluating how best to communicate about AI,” Barish says. “Is it in our interest to highlight our robust AI program in marketing? We’re also considering the approach: Should we personalize the message, explaining to each patient how AI was used in their care, or offer a general overview, such as, ‘This is how Northwell uses AI to enhance patient outcomes’?”

Radiologists often don’t interact directly with patients. “The exception is mammography and osteoporosis imaging. In these scenarios, a radiologist typically engages with the patient to explain the findings,” Barish says.

In Canada, radiologists are also not yet explaining AI’s place in treatment. “That’s partly because AI has been around for a long time,” Koff says. “We see it as just one more tool. We don’t explain every tool to patients.”

Several issues complicate explaining AI. Some patients are not conscious, stable, or lucid enough to discuss the topic. Others may not want to engage in discussions about the complex technology involved in their care. Health care providers may also have difficulty explaining the “black box” inside software programs, meaning how the AI algorithm accomplishes a task. This is proprietary information that software developers usually do not share.

In addition, there is the danger that patients could misperceive AI’s role. AI-powered software for radiology does not take the place of a radiologist. It does not draw on inaccurate data available on the internet. Nor does it encourage harmful actions like suicide.

A national poll conducted in the United States between April and August 2025 found that AI has a broad public trust problem, according to Civiqs, an Oakland, California-based public opinion research firm. Americans’ concerns with AI went beyond a single company or application. The primary reasons they viewed AI negatively were that they believed it produces inaccurate information, is susceptible to malicious use by bad actors, and is not subject to sufficient control and regulation.

Some of the public’s mistrust of AI stems from direct engagement with widely available AI tools like ChatGPT. Inaccurate or slanted media coverage that portrays AI as replacing people in the job market is another source of mistrust. Patients are also disadvantaged by the fact that there are few clear, unbiased, patient-directed sources of information that explain AI’s role in medical treatment.

Work in Progress

Another question is how health care providers should acknowledge the two primary types of AI: generative AI and nongenerative AI. Generative AI produces text and images in response to user input. Nongenerative AI analyzes information and makes predictions.

Gichoya says Emory University Hospital uses ambient AI, a generative AI tool, for documentation during patient visits and for tasks such as inbox management and patient chat search. This differs from the AI commonly used in medical imaging software, which is nongenerative and does not “hallucinate” the way ChatGPT can.

As radiologists determine how they will involve patients in improving AI-powered tools, they are also asking whether the effort is worth the expense. Erickson says AI does not save money.

“I read the case just as I used to, but I also read what the AI generates,” Erickson says. “So, I’m doing more work. AI can get things wrong; for example, a nutrient canal was read as a fracture. So, I am also trying to figure out why the AI made the error and correct that issue.”

Koff echoes this statement. He adds that radiologists must keep a trail of how and why they used AI tools. “We make sure this is monitored. If a mistake occurs, we want to determine who relied on it and what the consequences of that were,” Koff says.

Still, radiologists are likely to continue using AI tools, both to help software developers improve existing tools and to figure out where people make mistakes and AI tools do not.

“We’ve found that while the AI tool remains unchanged, unless updated, the radiologist improves with continued use,” Barish says. “This is because the tool relies on a fixed algorithm, but the radiologist gains experience and insight based on the feedback AI provides.”

It is important, however, for radiology departments to discourage radiologists from over-relying on AI tools. “When the AI tool does its job very well, a radiologist starts to get biased and overly rely on AI,” Barish says. “That is dangerous because it could lead to them acting on a false positive. The radiologist, as the person who is using the tool, needs to consider all factors to make a correct diagnosis.”

— Jessica Zimmer is a freelance writer living in northern California. She specializes in covering AI and legal matters.