AI Improves Chest X-Ray Interpretation

AI can detect clinically meaningful chest X-ray findings as effectively as experienced radiologists, according to a study recently published in the journal Radiology. Researchers say their findings, based on deep learning, could provide a valuable resource for the future development of AI chest radiography models.

Chest radiography, one of the most common imaging exams worldwide, is performed to help diagnose the source of symptoms such as cough, fever, and pain. Despite its popularity, the exam has limitations.

“We’ve found that there is a lot of subjectivity in chest X-ray interpretation,” says study coauthor Shravya Shetty, an engineering lead at Google Health in Palo Alto, California. “Significant interreader variability and suboptimal sensitivity for the detection of important clinical findings can limit its effectiveness.”

Deep learning has the potential to improve chest X-ray interpretation, but it has limitations of its own. For example, results derived from one group of patients cannot always be generalized to the population at large.

Researchers at Google Health developed deep learning models for chest X-ray interpretation that overcome some of these limitations. They used two large data sets to develop, train, and test the models. The first data set consisted of more than 750,000 images from five hospitals in India, while the second set included 112,120 images made publicly available by the National Institutes of Health (NIH).

A panel of radiologists convened to create the reference standards for certain abnormalities visible on chest X-rays used to train the models.

“Chest X-ray interpretation is often a qualitative assessment, which is problematic from a deep learning standpoint,” says Daniel Tse, MD, product manager at Google Health. “By using a large, diverse set of chest X-ray data and panel-based adjudication, we were able to produce more reliable evaluation for the models.”

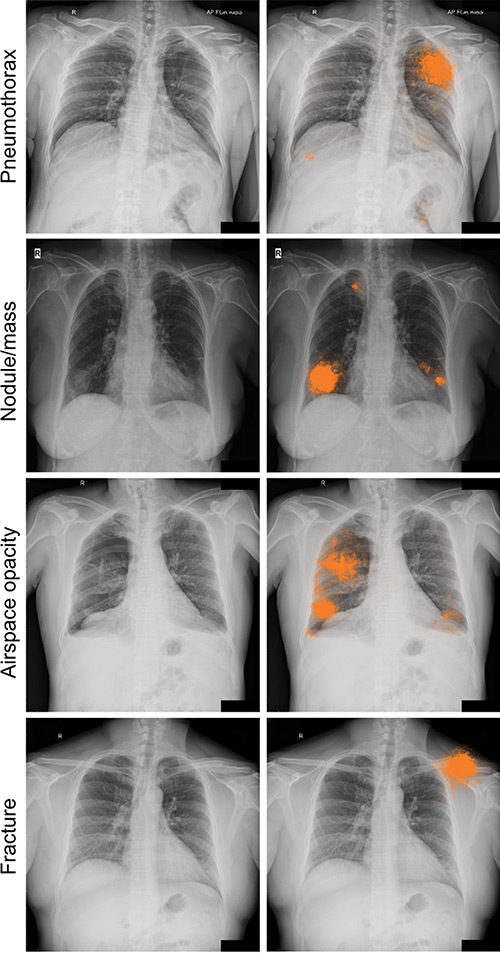

Tests of the deep learning models showed that they performed on par with radiologists in detecting four findings on frontal chest X-rays: fractures, nodules or masses, opacity, and pneumothorax.

Radiologist adjudication led to increased expert consensus on the labels used for model tuning and performance evaluation. The overall consensus increased from approximately 41% after the initial read to almost 97% after adjudication.

The rigorous model evaluation techniques have advantages over existing methods, researchers say. Beginning with a broad, hospital-based clinical image set, then sampling a diverse set of cases and reporting population-adjusted metrics, makes the results more representative and comparable. Additionally, radiologist adjudication provides a reference standard that can be both more sensitive and more consistent than other methods.
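One common way to report a population-adjusted metric is to weight each sampled case by how many cases it stands for in the source population. The Python sketch below illustrates that general idea only; the article does not describe the researchers' actual adjustment, and the `Case` type, the stratum names, and all counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Case:
    stratum: str         # hypothetical sampling group the case was drawn from
    has_finding: bool    # reference-standard label
    model_flagged: bool  # model output


def population_adjusted_sensitivity(cases, population_counts):
    """Sensitivity with each case weighted by population size / sample size
    of its stratum (an illustrative reweighting, not the study's method)."""
    sampled_counts = {}
    for case in cases:
        sampled_counts[case.stratum] = sampled_counts.get(case.stratum, 0) + 1

    # Each sampled case represents this many cases in the full population.
    weights = {s: population_counts[s] / n for s, n in sampled_counts.items()}

    positives = sum(weights[c.stratum] for c in cases if c.has_finding)
    detected = sum(
        weights[c.stratum] for c in cases if c.has_finding and c.model_flagged
    )
    return detected / positives


# Hypothetical data: the "enriched" stratum is oversampled relative to its
# share of the population, so its cases carry less weight.
cases = [
    Case("routine", True, True),
    Case("routine", False, False),
    Case("enriched", True, False),
    Case("enriched", True, True),
]
population_counts = {"routine": 900, "enriched": 100}

adjusted = population_adjusted_sensitivity(cases, population_counts)
unadjusted = 2 / 3  # two of the three positive cases were flagged
```

In this toy example the adjusted value differs from the unadjusted one because the missed case comes from the oversampled stratum.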

“We believe the data sampling used in this work helps to more accurately represent the incidence for these conditions,” Tse says. “Moving forward, deep learning can provide a useful resource to facilitate the continued development of clinically useful AI models for chest radiography.”

The research team has made the expert-adjudicated labels for thousands of NIH images available for use by other researchers at https://cloud.google.com/healthcare/docs/resources/public-datasets/nih-chest#additional_labels.

“The NIH database is a very important resource, but the current labels are noisy, and this makes it hard to interpret the results published on this data,” Shetty says. “We hope that the release of our labels will help further research in this field.”

Deep Learning Assists in Detecting Lung Cancers

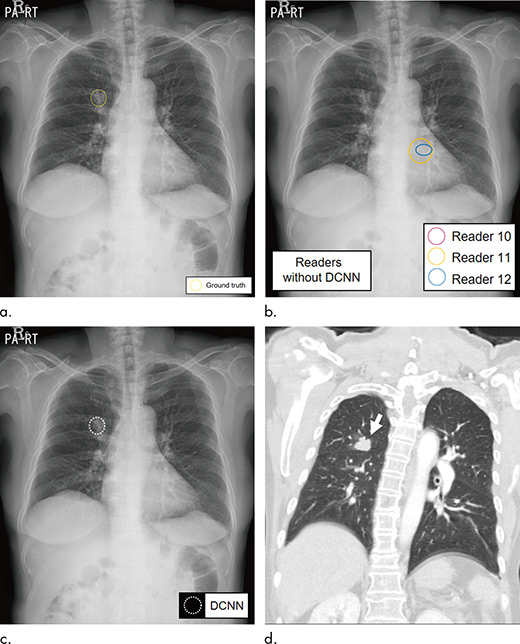

Radiologists assisted by deep learning–based software were better able to detect malignant lung cancers on chest X-rays, according to research recently published in the journal Radiology.

“The average sensitivity of radiologists was improved by 5.2% when they re-reviewed X-rays with the deep learning software,” says Byoung Wook Choi, MD, PhD, a professor at Yonsei University College of Medicine, and cardiothoracic radiologist in the department of radiology in the Yonsei University Health System in Seoul, Korea. “At the same time, the number of false-positive findings per image was reduced.”

Choi says the characteristics of lung lesions, including size, density, and location, make the detection of lung nodules on chest X-rays more challenging. However, machine learning methods, including the implementation of deep convolutional neural networks (DCNN), have helped to improve detection.

A DCNN, loosely modeled on the structure of the brain, uses multiple hidden layers to learn patterns in images and classify them.

In this retrospective study, radiologists randomly selected a total of 800 X-rays from four participating centers, including 200 normal chest scans and 600 with at least one malignant lung nodule confirmed by CT imaging or pathological examination (50 normal and 150 with cancer from each institution). There were 704 confirmed malignant nodules in the lung cancer X-rays (78.6% primary lung cancers and 21.4% metastases). The majority (56.1%) of the nodules were between 1 cm and 2 cm, while 43.9% were between 2 cm and 3 cm.

A second group of radiologists, including three from each institution, interpreted the selected chest X-rays with and without cancerous nodules. The readers then reread the same X-rays with the assistance of DCNN software, which was trained to detect lung nodules.

The average sensitivity, or the ability to detect an existing cancer, improved significantly from 65.1% for radiologists reading alone to 70.3% when aided by the DCNN software, a gain of 5.2 percentage points. The number of false positives per X-ray (findings that incorrectly report cancer as present) declined from 0.2 for radiologists alone to 0.18 with the help of the software.
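For readers who want to see how these two metrics are defined, the short Python sketch below spells them out and applies simple arithmetic to the reported averages. The function names are illustrative, not taken from the study, and the standard definitions used here are assumed to match the ones the researchers applied.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Fraction of existing cancers that were detected."""
    return true_positives / (true_positives + false_negatives)


def false_positives_per_image(false_positive_marks: int, n_images: int) -> float:
    """Average number of incorrect cancer marks per X-ray."""
    return false_positive_marks / n_images


# Averages reported in the article (readers alone vs. aided by DCNN software).
sens_alone, sens_aided = 0.651, 0.703
fp_alone, fp_aided = 0.20, 0.18

# Absolute change in sensitivity, in percentage points.
gain_points = (sens_aided - sens_alone) * 100

# Relative reduction in false positives per image.
fp_relative_drop = (fp_alone - fp_aided) / fp_alone

print(f"Sensitivity gain: {gain_points:.1f} percentage points")
print(f"Relative drop in false positives per image: {fp_relative_drop:.0%}")
```

The sensitivity change is an absolute difference in percentage points, while the false-positive change is shown here as a relative reduction; the two should not be compared directly.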

“Computer-aided detection software to detect lung nodules has not been widely accepted and utilized because of high false-positive rates, even though it provides relatively high sensitivity,” Choi says. “DCNN may be a solution to reduce the number of false-positives.”

— Source: RSNA